The following steps are for an example to submit a job for neural-net fit to 'N' number of kinematic settings in the data set (where N is an integer reflects to the range of kinematic settings which you will input in the sbatch command to submit the job).

1. Make sure that Prof. Keller has added you to both the spin and spinquest groups in Rivanna.

2. Copy the sample files from the following Rivanna folder "/project/ptgroup/ANN_scripts/Rivanna_test_code_for_ANN"

$ cd /project/ptgroup/ANN_scripts/Rivanna_test_code_for_ANN

Here are the list of file that you need to have in your work directory:

Definitions →

BHDVCStf.py

Lorentz_Vector.py

TVA1_UU.py

Data file → dvcs_xs_May-2021_342_sets.csv

Main file → Full_ML_fit_evaluation_Set2.py

Job submission file → Job.slurm

3. Change the path(s) in the following files

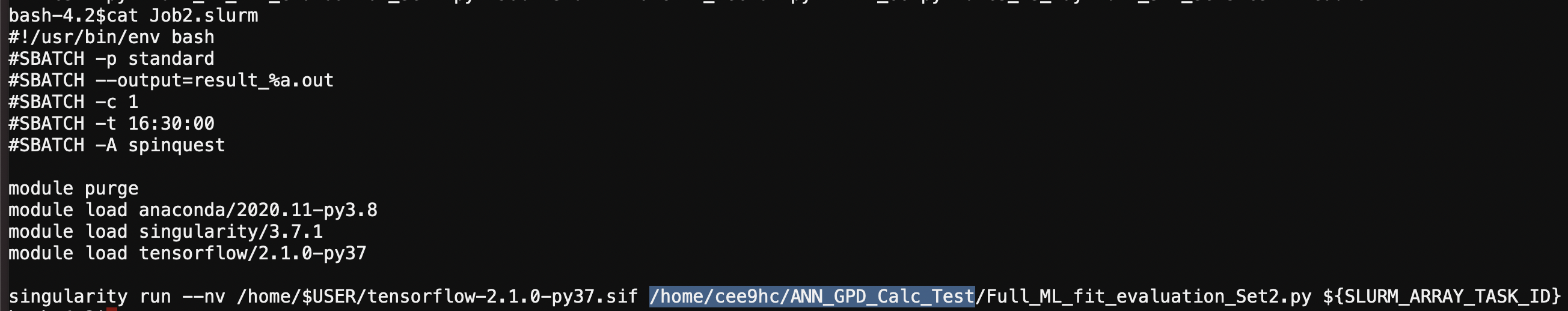

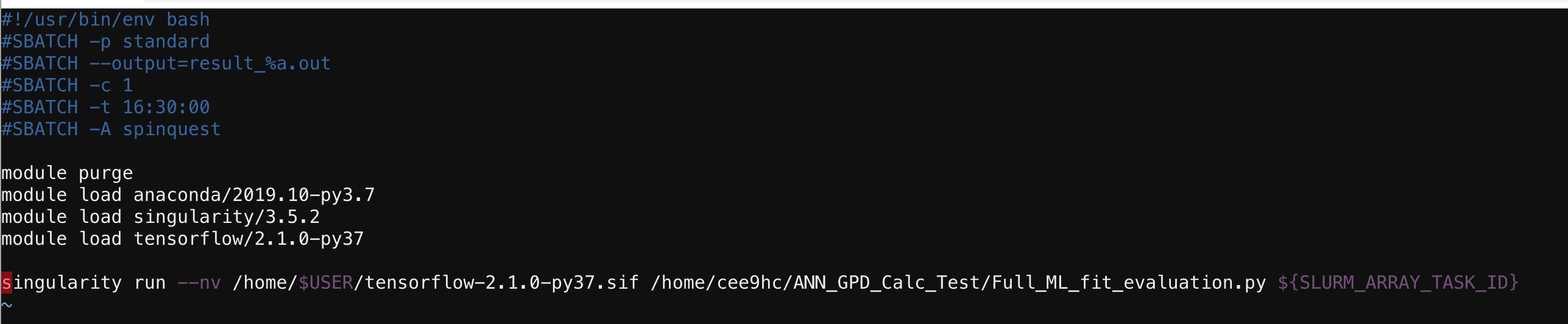

3.1) Highlighted line in "Job.slurm" file (please see below) with the correct path of 'your files'

3.2) Similarly update the paths on "Full_ML_fit_evaluation_Set2.py" file

Line numbers → 22, 31, 154

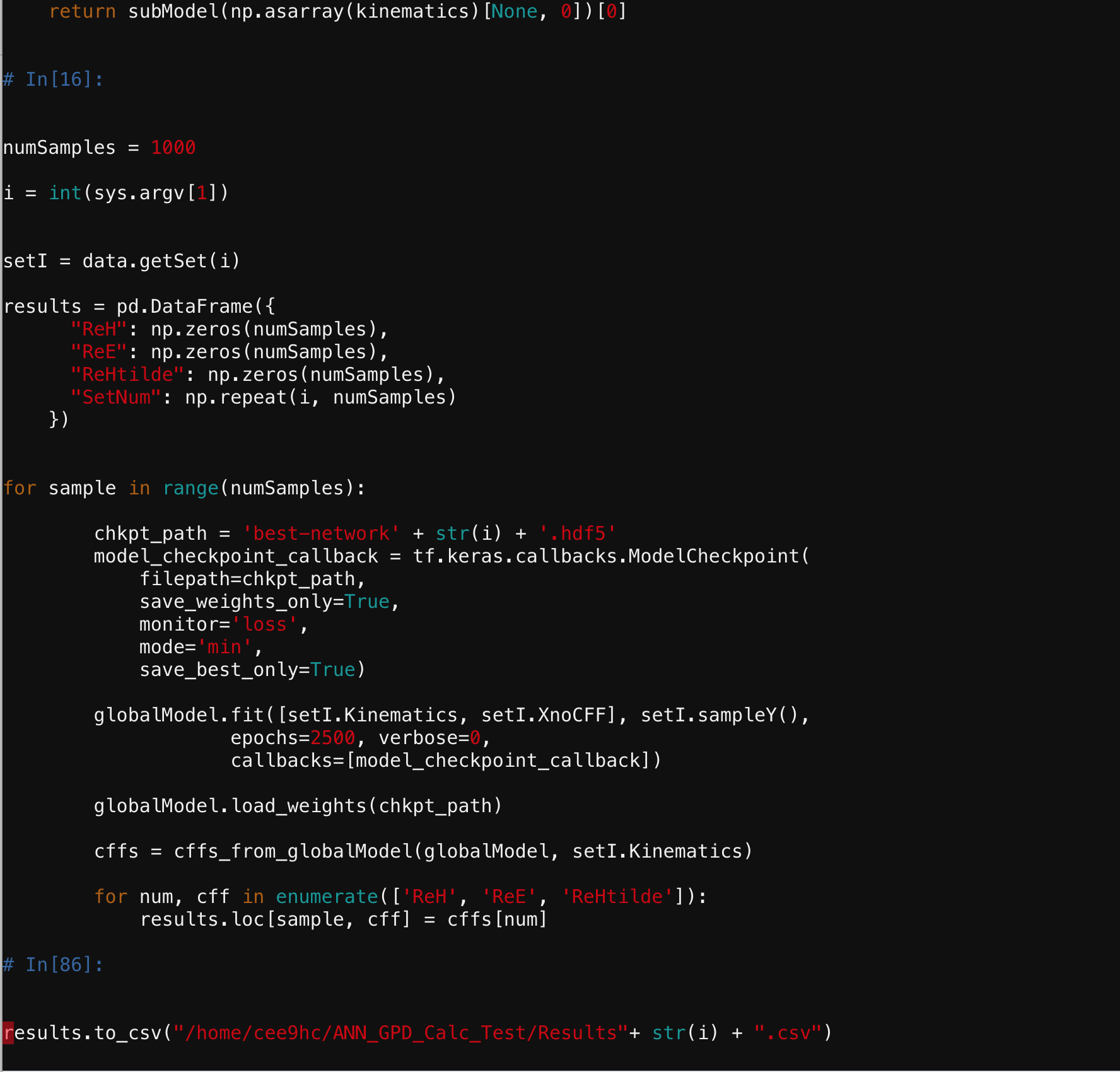

4. (This step is not necessary to check the code running, but to speed up the testing) For a quick test, you can change the "number of samples" to a small number to test (in other words "number of replicas") which is in line number 115: 'numSamples = 1000'

5. Run the following commands on your terminal

$ module load anaconda/2020.11-py3.8

$ module load singularity/3.7.1

$ module load tensorflow/2.1.0-py37

$ cp $CONTAINERDIR/tensorflow-2.1.0-py37.sif /home/$USER

(make sure that you have the same module loads included in your Job.slurm file

6. Run the following command

$ sbatch --array=0-14 Job.slurm

Note: Here 0-14 means the number of kinematic settings that you want to run in parallel (this is parallelization of local fits), and as a part of the output you will see Results#.csv (where # is an integer number) files which contain distributions of Compton Form Factors (CFFs) from each (individual) local fit.

-------------------------------------------------- check below for earlier/previous instructions-------------------------------------------

(updated in December 2020)

------------------------------

Here are some recent instructions for running jobs on Rivanna (by Nick & Ishara):

1. Make sure that Prof. Keller has added you to both the spin and spinquest groups in Rivanna. Without both groups, you will not be able to gain access to the system. There are two ways of accessing Rivanna (https://www.rc.virginia.edu/userinfo/rivanna/login/); you can follow either step (2) or step (3) mentioned below.

The files you need for this particular example, can be found in the following links (make sure to modify the path in the files, with the appropriate one).

https://github.com/extraction-tools/ANN/tree/master/Rivanna_Example_Code

or

https://myuva.sharepoint.com/:f:/r/sites/as-physics-poltar/Shared%20Documents/ANN%20Extraction/Generic_Example_Files?csf=1&web=1&e=olyqru

or you can copy the files from the following Rivanna folder

/project/ptgroup/ANN_scripts/Rivanna_test_code_for_ANN

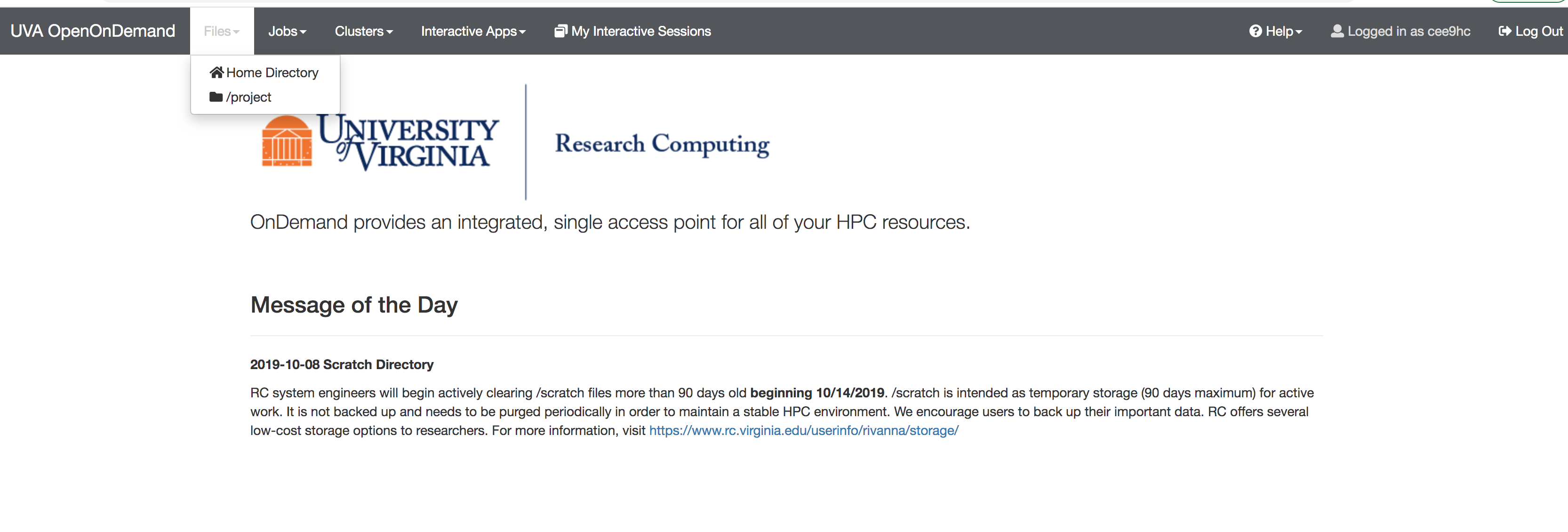

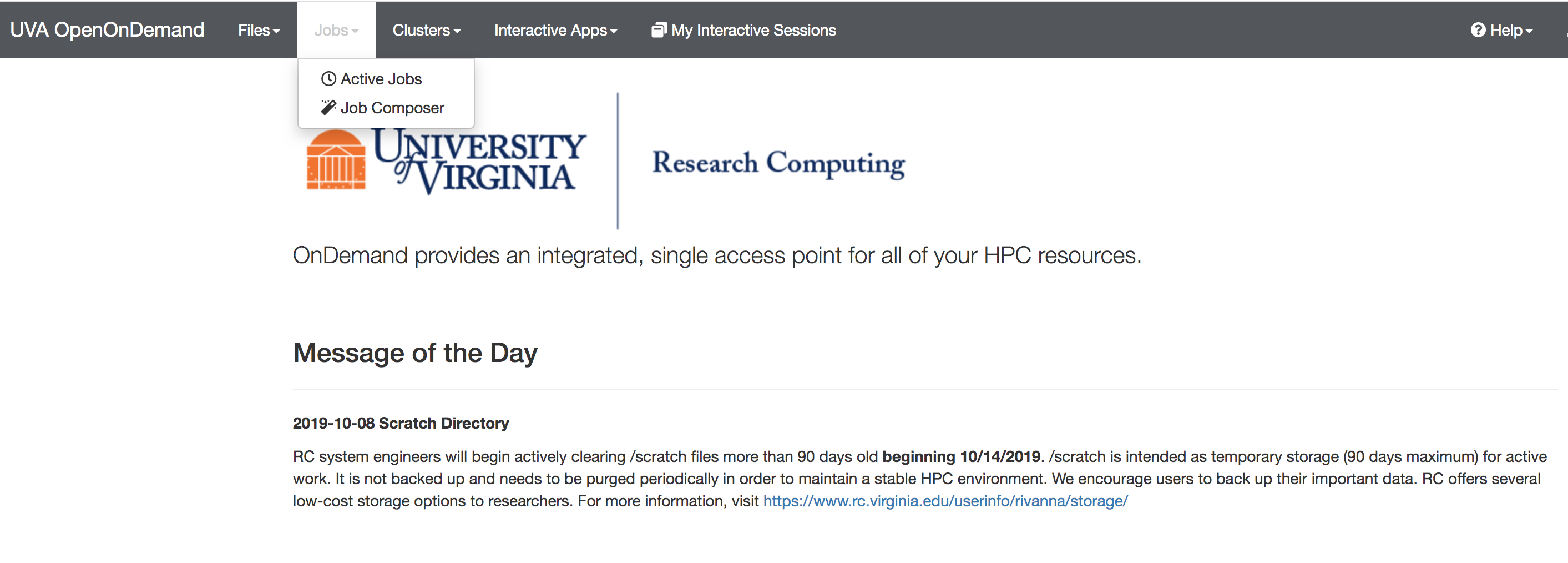

2. Web-based Access (Click on "Launch OpenOndemand" > You will need your UVA computing ID and password to log in)

- You can navigate to your "Files", "Jobs", "Clusters", etc. via the menu bar (see the above image)

- You can create directories/folders inside your "home" directory, and upload your files using the "Upload" option in the menu bar.

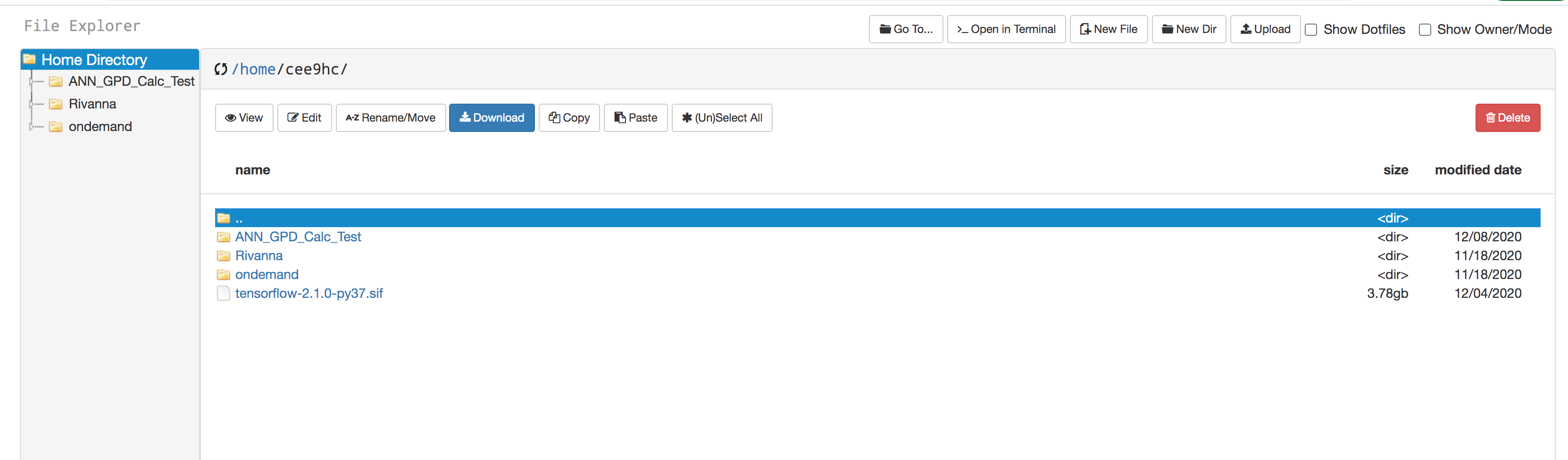

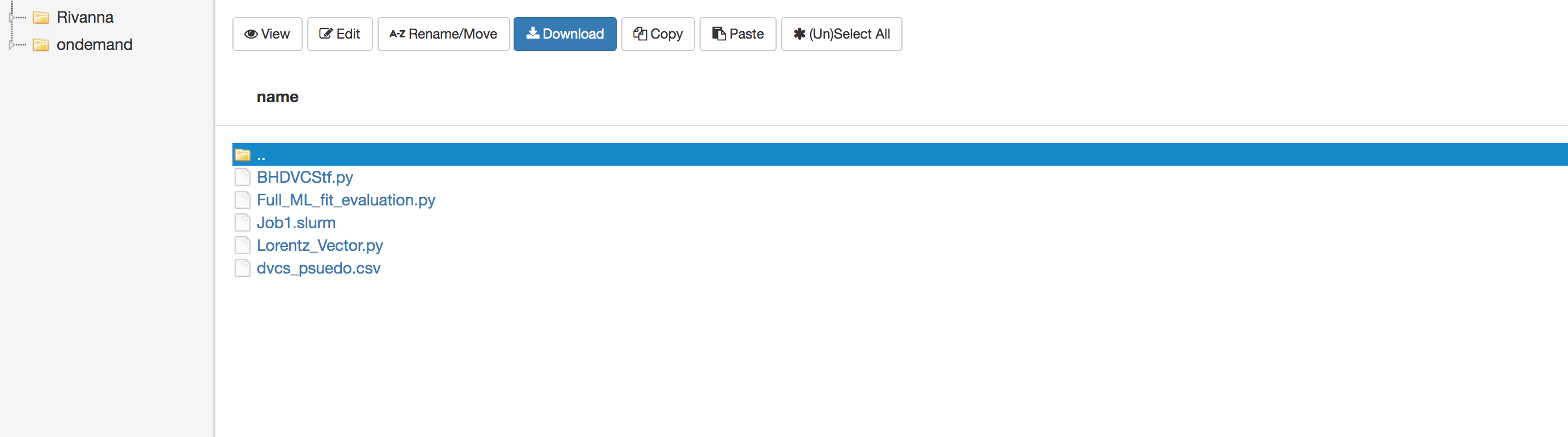

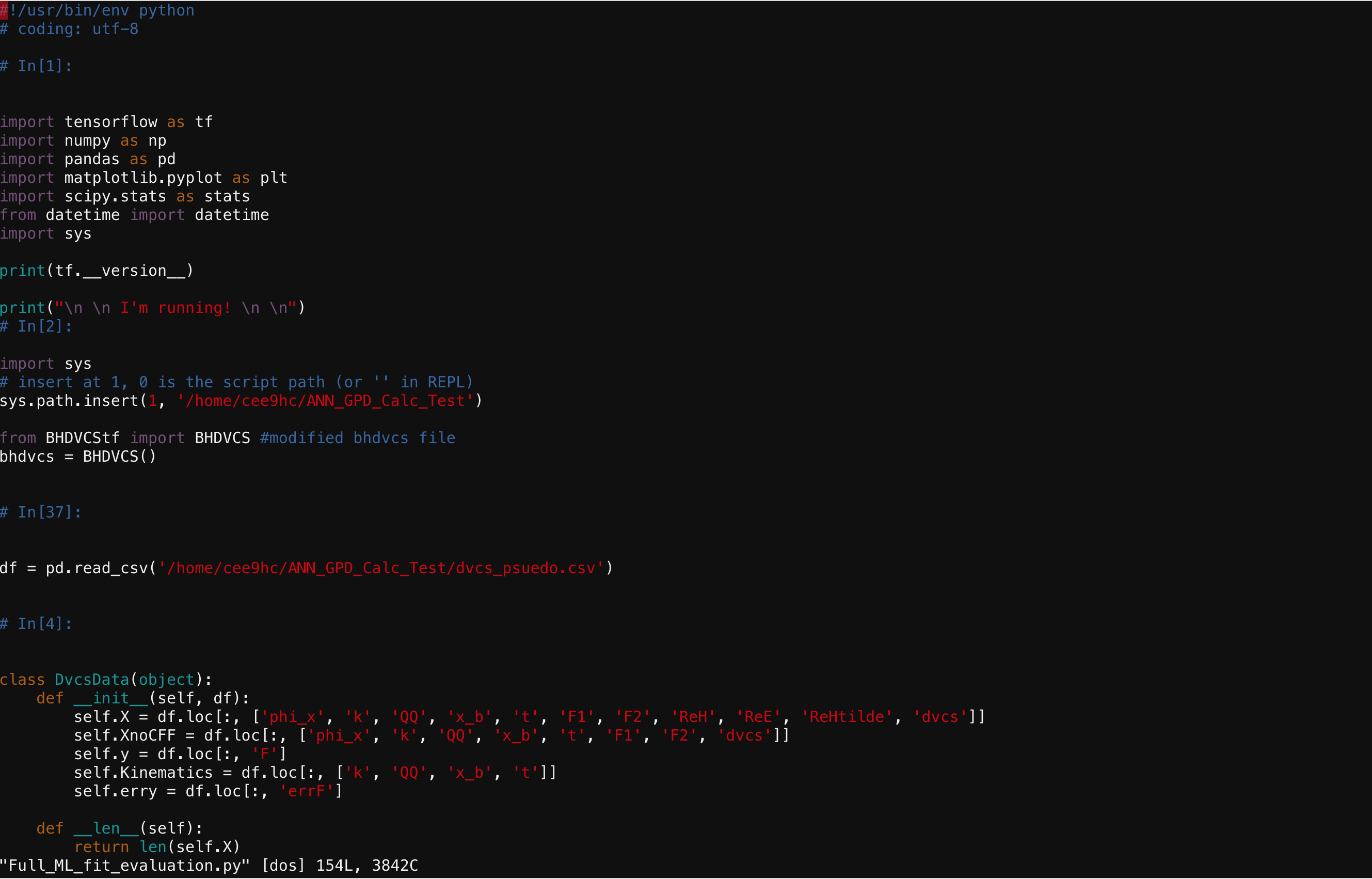

- For this specific example, you will need the files (in the working directory) shown in the following screenshot

- The files that needed to be modified are Full_ML_fit_evaluation.py, Job1.slurm (or Jbb2.slurm) with the correct paths. See the following screen-shots as an example: Notice the paths in "red".

Note: please check the anaconda module available on Rivanna and replace that version with the first line in the code-snippet above.

- In order to run SLURM or interactive jobs (ijobs), it is best practice to create a local copy of the container image in your own /home or /scratch directory. Initially (most probably) you will not see the file "tensorflow-2.1.0-py37.sif" file in your home directory. If so, "click" on the ">_Open in Terminal" on the menu bar; a new tab on your web browser will show up with a terminal environment and issue this commands:

module load anaconda/2020.11-py3.8

module load singularity/3.7.1

module load tensorflow/2.1.0-py37

cp $CONTAINERDIR/tensorflow-2.1.0-py37.sif /home/$USER

- You may modify parameters such as the learning rate and numSamples with specific values in the Full_ML_fit_evaluation.py .

- If all the above steps are completed, then issue the command: sbatch --array=0-14 Job1.slurm (please note that this "Job1" is an example, you can have your own filename). If there are no error messages, then you should be able to see your job(s) running, using the Jobs tab > Active Jobs (see the following screen-shot)

3. Secure Shell Access:

- You will need a UVA's VPN in order to SSH to Rivanna. Follow this link and follow the instructions on the page to download and configure the VPN. There are three types of network accesss available: "UVA More Secure Network", "UVA Anywhere", and "High Security VPN". "UVA More Secure Network" would be the prefered one, but "UVA Anywhere" would also work if "UVA More Secure Network" is not available.

- Open a UNIX terminal and connect to Rivanna with the command "ssh -Y mst3k@rivanna.hpc.virginia.edu" (replacing mst3k with your computing id). The password is the same as your UVA netbadge password. If you are not now at the Rivanna command line check that the step(1) + above steps are successfully completed.

- To move the code and associated resources into your Rivanna directory, you can use secure copy from another terminal. The resources you need for running the code are the same as with the Colab notebook, except that it is necessary to run a pure python file as opposed to a notebook on Rivanna. I have uploaded a python version of the same code to the Github. One additional resource that was not previously necessary is the bash script to run the Rivanna job, called as "Job1.slurm" for this example. You will need to make sure the locations/paths mentioned in your program files contain your change computing ID. Additionally, within the python file you will want to change the learning rate and numSamples parameters to the specified values.

- Run the job with the command "sbatch --array=0-14 Job1.slurm". For more information about this command see this link. This will run all the kinematic sets simultaneously for however many replicas you specified with the numSamples parameter. For 1000 replicas, the process may take up to six or seven hours. If you desire to run only a handful of kinematic sets for 1000 replicas that can be done much more quickly but requires some slight changes to the code (The replicas would be parallelized instead of the kinematic sets. In fact, both could be parallelized, but Rivanna prevents you from running more than a few thousand jobs simultaneously). Let me know if that is the case and I can edit the code for you.

4. Once the job is started, you will see a job ID in your Rivanna terminal. You can monitor the progress of your job by navigating the the "Jobs" page on your web browser (see fig 1 above).

5. The results of the replicas will be in your home directory of Rivanna under the name Results(0-14).csv. These can be sent back to your local system for analysis with the scp command or downloading via the OpenOnDemand